There are two things in this world that I don't understand: Serverless and Serverless. Part of that could be on me for not having a lot of experience with it, because every time I dip my toe in the serverless water, it's freezing cold and I don't want to get started. From the outside, Serverless looks very attractive. I give it at least an eight out of ten. And the part that I find most beautiful is that it can auto-scale to exactly the load you have.

And you don't even have to think about servers. If you have 20 people using your app on a daily basis and then all of a sudden your app blows up on Reddit and now 20,000 people are using it, no problem, everything works just fine. As more requests surge in, functions pop up left and right to handle all of them, and you only pay for the number of calls that actually happen. We'll just ignore the fact that your database died after 20 seconds under that load because you're on the RDS free tier. In theory, this sounds fantastic, which is why I've been waiting for an excuse to try something a little more serious with Serverless.

And the day finally came around when I wanted to switch how I was dynamically resizing images. This is where you pass something like a width of 300 and a height of 600 to an image endpoint such as https://img.something.com/id?width=300&height=600, and it resizes the image on the fly before sending it back to your browser. I wanted that, and I wanted it "serverlessly", or at least I thought it might be interesting to try with Serverless. I had seen that AWS lets you deploy a serverless image handler that does exactly what I want. The only thing is I avoid AWS like the plague. So I made the mistake of trying Google Cloud.
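As a rough illustration of that kind of endpoint, here is a minimal sketch of how the `width` and `height` query parameters could be parsed and validated before any resizing happens. The names `parseResizeOptions`, `parseDimension`, and `MAX_DIMENSION` are hypothetical, and the 2000 pixel cap is an assumption, not something the original service is known to use.

```typescript
// Hypothetical helper: names and limits are illustrative, not taken from the real service.
interface ResizeOptions {
  width: number;
  height: number;
}

// Assumed upper bound so a single request cannot ask for an enormous image.
const MAX_DIMENSION = 2000;

// Accepts only plain positive integers within the allowed range.
function parseDimension(raw: string | null): number | null {
  if (raw === null || !/^\d+$/.test(raw)) return null;
  const value = Number(raw);
  return value >= 1 && value <= MAX_DIMENSION ? value : null;
}

// Returns the requested size, or null if the URL or either parameter is invalid.
function parseResizeOptions(requestUrl: string): ResizeOptions | null {
  let params: URLSearchParams;
  try {
    params = new URL(requestUrl).searchParams;
  } catch {
    return null; // malformed URL
  }
  const width = parseDimension(params.get("width"));
  const height = parseDimension(params.get("height"));
  if (width === null || height === null) return null;
  return { width, height };
}

console.log(parseResizeOptions("https://img.something.com/id?width=300&height=600"));
```

The validated numbers would then be handed to whatever does the actual resizing. Rejecting bad input up front keeps the expensive image work from running on requests that could never succeed.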

I know I should have known better, but you know what? You live, you learn. Now I know not to make that mistake again. For those of you who are unaware, Google's Serverless option is called Cloud Functions. And it is kind of slow, to put it lightly.

I created a very basic Node.js function that used the Sharp library to resize images. When I tried it out, it took around 2 seconds to load, and in 2022 that is slow as turtles.

At first, I thought this is just what I deserve for using a turtle language like Node.js, and I was contemplating switching over to Go or Rust to see if it would be faster (speaking of Rust and Serverless, I have an article about that: Deploy Rust Applications To AWS Lambda). But the thing is, I was using Sharp, and Sharp has native bindings under the hood to C or C++ or something, so it should have been fast, and it wasn't. After further investigation, I found the true culprit was not Node.js but the cold start, which, if you're unaware, is the jargon term for when your function is called for the very first time and takes a little while to initialize and spin up. Before your code actually gets executed, Google Cloud has to create a Node process, parse your dependencies and all that little stuff, and that takes time. In my case, the cold start was consistently taking over a second, while my actual code was only running for 100 to 400 milliseconds depending on the size of the image.
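One common way to see this from inside a function is to rely on the fact that module-level code runs once per function instance, while the handler runs once per request. The sketch below is an assumption-laden illustration, not the code from my resizer: `handleInvocation` and `InvocationInfo` are hypothetical names, and a real handler would also take the request and return the image.

```typescript
// Module scope runs once per function instance, during initialization (the cold start).
const instanceStartedAt = Date.now();
let servedFirstRequest = false;

interface InvocationInfo {
  coldStart: boolean;     // true only for the first request this instance handles
  instanceAgeMs: number;  // how long this instance has been alive
}

// Hypothetical handler: runs once per request on an already initialized instance.
function handleInvocation(): InvocationInfo {
  const coldStart = !servedFirstRequest;
  servedFirstRequest = true;
  return { coldStart, instanceAgeMs: Date.now() - instanceStartedAt };
}

const firstCall = handleInvocation();
const secondCall = handleInvocation();
console.log(firstCall.coldStart, secondCall.coldStart); // true false
```

Logging a flag like this makes it possible to tell afterwards which slow requests were paying for initialization and which were just slow.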

I looked online to see if this was normal and found someone who had documented the range of cold starts for Google Cloud. It turns out it's standard for Cloud Functions to be this slow for Node.js, and other languages are actually even slower. But if you compare this to AWS Lambda, you find out Google Cloud Functions just kind of suck.

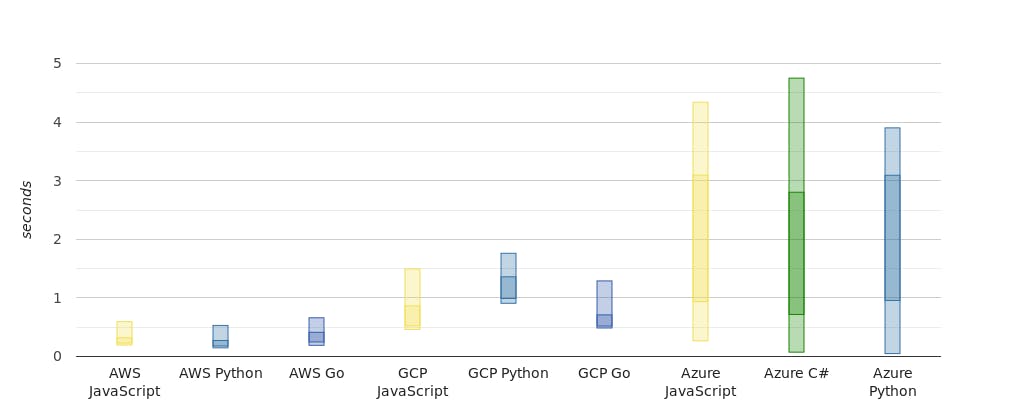

For Node.js, the cold start for Lambda is anywhere between 0.2 and 0.6 seconds, compared to Cloud Functions, which came in at 1 to 4 seconds. If you're wondering about Azure, here's a chart comparing Lambda versus Cloud Functions versus Azure: Azure is about as bad as Cloud Functions when it comes to cold starts, except it can randomly be way worse.

I was shocked to see Lambda winning by such a big margin. It's not even competitive, so if you are using Google Cloud Functions or Azure, you need to switch ASAP. Of course, once I learned that, it was a no-brainer to switch over to Lambda, so I took five minutes porting over my code and then the next ten weeks configuring API Gateway, and then I was ready to give it a try. It's probably worth noting that I didn't use the one-click AWS serverless image handler I mentioned earlier, the one that spins up the entire infrastructure for you, because I have my images on Google Cloud Storage and not on S3. That was for a very good reason: I tested both, and Google Cloud Storage gave me better upload speeds than S3, so I did want my images over there.

If we look at the memory used, my function is hardly using any. But as you increase the memory allocated to these functions, the CPU increases too, which helps with cold starts and also with resizing the image itself. I got excited when I saw these results because my goal is to get the average under 500 milliseconds, and it does exactly that. Unfortunately, my method for testing wasn't very scientific, and I think most of these calls, if not all of them, were warm function calls. I haven't really talked about that yet. The first time you call a function, you get the cold start we talked about while it initializes. After that, the function stays warm for 15 minutes or so and doesn't have to be initialized again, so it's a lot faster, and that's what we're seeing here.

What I did next to get better information was to turn on tracing for Lambda, which can be done through X-Ray. Now I have traces of the Lambda calls that I can look at, and you can see which part of each call was the cold start and which part was the function actually running.
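Once traces like these are exported, splitting them into cold and warm calls is a small amount of code. The sketch below assumes a simplified, hypothetical record shape (`TraceRecord` with an optional `initMs`), not the actual X-Ray format, and the sample numbers are made up purely for illustration.

```typescript
// Hypothetical record shape: one entry per traced invocation.
// initMs is present only when the trace shows an initialization (cold start) phase.
interface TraceRecord {
  initMs?: number;
  handlerMs: number;
}

interface TraceSummary {
  coldStartShare: number; // fraction of invocations that were cold, from 0 to 1
  meanColdMs: number;     // mean total latency of cold invocations (0 if there were none)
  meanWarmMs: number;     // mean latency of warm invocations (0 if there were none)
}

function meanOf(values: number[]): number {
  return values.length === 0 ? 0 : values.reduce((sum, v) => sum + v, 0) / values.length;
}

function summarizeTraces(records: TraceRecord[]): TraceSummary {
  const cold = records.filter((r) => r.initMs !== undefined);
  const warm = records.filter((r) => r.initMs === undefined);
  return {
    coldStartShare: records.length === 0 ? 0 : cold.length / records.length,
    meanColdMs: meanOf(cold.map((r) => (r.initMs ?? 0) + r.handlerMs)),
    meanWarmMs: meanOf(warm.map((r) => r.handlerMs)),
  };
}

// Made-up numbers for illustration only, not measurements.
const sampleTraces: TraceRecord[] = [
  { initMs: 500, handlerMs: 300 },
  { handlerMs: 100 },
  { handlerMs: 200 },
  { handlerMs: 300 },
];
console.log(summarizeTraces(sampleTraces));
```

Keeping cold and warm latencies in separate averages matters here, because a single combined average hides exactly the difference the traces were turned on to reveal.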

I believe that in practice, if you have a consistent stream of requests coming in, nice and even rather than all at once, then you get fewer cold starts. But that is something I still need to test. AWS also has a thing called provisioned concurrency, where you pay extra to keep X functions on and warm. So let's say you know you're going to get something like 30 requests per second. Then maybe you can provision 30 functions that will always be on and ready.
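For sizing that number, a common rule of thumb is that the concurrency you need is roughly the request rate multiplied by how long each request takes. The sketch below applies that estimate; `estimateConcurrency` is a hypothetical helper, and the durations are assumptions chosen to match the figures in this article, not a recommendation for any particular workload.

```typescript
// Rough estimate: concurrent instances = requests per second * average seconds per request.
// The duration is taken in milliseconds so typical inputs stay whole numbers.
function estimateConcurrency(requestsPerSecond: number, avgDurationMs: number): number {
  if (requestsPerSecond < 0 || avgDurationMs < 0) {
    throw new RangeError("rate and duration must be non-negative");
  }
  return Math.ceil((requestsPerSecond * avgDurationMs) / 1000);
}

console.log(estimateConcurrency(30, 400));  // 12
console.log(estimateConcurrency(30, 1000)); // 30
```

Under this estimate, 30 provisioned functions for 30 requests per second corresponds to each request taking about a full second. If warm requests finish in a few hundred milliseconds, fewer instances might be enough, though real traffic is rarely perfectly even.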

And this is the part that I don't really understand, because if you have consistent traffic, or you know roughly how many requests you're going to get, why are you even using Serverless in the first place? Why not just use a regular server? It seems to me that if your API is only good when the functions are warm, and you're provisioning functions to make sure they're warm, you're missing the whole point of Serverless, which is that you can scale up or down at a moment's notice. But the thing is, to take advantage of that, you have to be okay with cold starts.

And that's kind of tough because it kind of feels like you're just handicapped when it comes to performance. I've seen a bunch of stuff that serverless experts recommend doing and not doing to reduce the effect of cold start, and you can go down that path and you can try optimizing those things, but I'm over here thinking on a whole nother level. Do you know what I want? I'll tell you what I want. This is big brain time, okay?

I want my serverless functions to never cold start, not even once. And next thing you know, I'm not using Serverless anymore. I'm spinning up a thick Kubernetes cluster, getting 100% warm requests. And you know what? It feels amazing not having to wait 500 milliseconds before your code can even execute.

Or I have option two for you, which is Cloudflare Workers, which boasts on its website of having zero milliseconds of cold start time. I did play around with creating a worker to resize images, and I got close to having it working, but it uses a different runtime than Lambda, so there are some limitations. You can't use Node libraries that have native bindings, like Sharp. So I ended up trying out a WebAssembly example to resize images. That was kind of jank, to be honest.

And it kept rebuilding the WebAssembly code for some reason, so I wasn't too confident in it. Plus, I saw on the pricing page that even if you start paying money, you can only get up to 50 milliseconds of CPU time per request, which I didn't think would be enough for resizing images. So Cloudflare Workers didn't seem like a good choice for my particular use case. But it is something I want to try out in the future if I get a project that fits within the limitations, because zero milliseconds of cold start time is something that I want in my life. But there you go.

That's pretty much why I haven't been too keen to convert everything I do over to Serverless. And I'm not quite sure what I'm going to do with my image resizer on Lambda, or whether I'm going to keep it there. What I might do is leave it for a little bit and see, under normal usage, what percentage of requests are cold starts versus warm. I'm going to be sticking a CDN in front of it anyway, so it's okay if a lot of the requests are slow. And yeah, there's a good chance that after a little while, Serverless and I are going to be friends. If you liked this article, please consider sponsoring me. If you have any questions, please ask them in the comments or on Twitter. Thanks for reading!